Much of the content that I create on my blog has value beyond just the day it was posted. This is what is known as ‘evergreen’ content. I wanted a way to ensure that the best of this evergreen content gets posted to social media on a regular basis. This allows new followers to discover information they may have missed because it was published before they started following me.

Thus, for this exercise I wanted a process where I could list the tweets I want to recur and have them scheduled to be sent automatically. I currently use a third-party service to achieve some of this, but it lacks some features and flexibility, so I decided to have a crack at solving this challenge using Microsoft Flow.

Now, I will fully admit up front that I am still bumbling around Microsoft Flow and finding out how things work. There are probably better and more efficient ways to achieve what I’m doing here, but this is just my initial step and I plan to continually improve it over time. So if you have any suggestions or know how I can do things better, by all means please let me know.

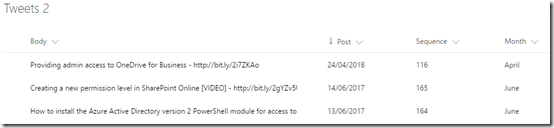

To start with, I needed a location to store my tweets. I therefore created a custom list in SharePoint in Office 365.

The Body field is a text field of 140 characters max (as this is how long a single tweet can be) and contains what will be tweeted. The Post field is the date on which I want the tweet to be sent. My plan is to scan the Post field for today’s date, then use the Body field from the matching record as the text of the tweet that gets sent out. I then wanted to add one year to this Post date so the same tweet would be scheduled to recur next year. I’ll get to the challenges I encountered doing this shortly.
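To make the list structure concrete, here is a minimal Python sketch of one row. The class name `TweetRow` and its fields `body` and `post` are hypothetical stand-ins for the Body and Post columns (they are not anything SharePoint or Flow exposes), and the length check simply mirrors the 140-character limit on Body.

```python
from dataclasses import dataclass
from datetime import date

MAX_TWEET_LENGTH = 140  # mirrors the Body field's 140-character limit


@dataclass
class TweetRow:
    """Hypothetical model of one list row: the Body and Post columns."""
    body: str   # text that will be tweeted (Body)
    post: date  # day the tweet should be sent (Post)

    def __post_init__(self) -> None:
        # Reject text that would not fit in the Body field
        if len(self.body) > MAX_TWEET_LENGTH:
            raise ValueError(
                f"Body is {len(self.body)} characters; max is {MAX_TWEET_LENGTH}"
            )
```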

I also gave each tweet a unique sequence number, stored in a field called Sequence, so I could easily track which tweet I was dealing with. I could have used the SharePoint ID field, but I wanted more control, so I created my own.

I also wanted to be able to group my tweets by month (down the track I was thinking about doing things like making May the month of Azure). This field, called Month, is a calculated field as shown above. It simply gets the month value from the Post field.
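The Month column just pulls the month out of Post. As a rough illustration of how that enables grouping, this Python sketch groups made-up sequence numbers by the month of their Post date; the function name `group_by_month` and the dictionary shape are assumptions for illustration only.

```python
from collections import defaultdict
from datetime import date


def group_by_month(posts: dict[int, date]) -> dict[int, list[int]]:
    """Group sequence numbers by the month (1-12) of their Post date.

    Plays the same role as a calculated Month column derived from Post.
    """
    groups: dict[int, list[int]] = defaultdict(list)
    for sequence, post in sorted(posts.items()):
        groups[post.month].append(sequence)
    return dict(groups)


# Made-up schedule: sequence number -> Post date
schedule = {1: date(2017, 5, 11), 2: date(2017, 5, 12), 3: date(2017, 6, 1)}
print(group_by_month(schedule))  # {5: [1, 2], 6: [3]}
```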

The Next Post field was how I solved rescheduling the tweet for the following year. I tried all sorts of things in Microsoft Flow to update the existing Post field, but none worked, so I decided to simply use a calculated field in SharePoint, which you can see above. The Next Post field takes the date in the Post field and adds one year using the formula above. After the tweet has been sent, the Microsoft Flow uses this Next Post field to update the Post field.
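The actual SharePoint formula is in the screenshot above. As a language-neutral illustration of the same "Post plus one year" idea, here is a minimal Python sketch; the name `add_one_year` is hypothetical. The one edge case worth thinking about is a tweet scheduled on 29 February, since the following year has no such day. This sketch clamps it to 28 February, which is a design choice of mine rather than a claim about how SharePoint handles it.

```python
from datetime import date


def add_one_year(post: date) -> date:
    """Return the same calendar day one year later (a 'Next Post' value).

    29 February is clamped to 28 February because the following
    year is never a leap year.
    """
    try:
        return post.replace(year=post.year + 1)
    except ValueError:
        # Only 29 February can fail here: the next year has no such day
        return post.replace(year=post.year + 1, day=28)
```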

So now onto building the Microsoft Flow.

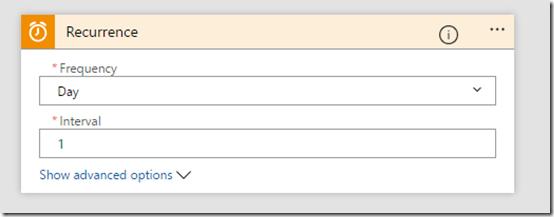

I started with a Recurrence block. I want a tweet to go out daily, so I set that here. When you are testing, you may want to change this to something like 1 minute so you can make sure things are working. You can always change it back once you are confident in your code.

I would also like to be able to set exactly what time of day this recurrence happens. I couldn’t see how to do that, but the first time you run the Microsoft Flow becomes the starting point for the recurrence. Thus, run your Flow for the first time at the time of day you want it to tweet automatically.
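To picture why the first run matters, here is a small Python sketch of a fixed-interval schedule anchored at its first run: every later run lands a whole number of intervals after it, so a daily recurrence first run at 9:00 keeps firing at 9:00. The function name `next_runs` and the 9:00 start are hypothetical, and this only illustrates the behaviour described above; it is not how Flow's scheduler is implemented.

```python
from datetime import datetime, timedelta


def next_runs(first_run: datetime, interval: timedelta, count: int) -> list[datetime]:
    """List the first `count` run times of a fixed-interval recurrence.

    Each run is a whole number of intervals after `first_run`, so with a
    one-day interval the time of day of the first run repeats every day.
    """
    return [first_run + interval * i for i in range(count)]


# Hypothetical daily schedule whose first run was at 9:00
for run in next_runs(datetime(2017, 5, 12, 9, 0), timedelta(days=1), 3):
    print(run.isoformat())
# 2017-05-12T09:00:00
# 2017-05-13T09:00:00
# 2017-05-14T09:00:00
```

Swapping `timedelta(days=1)` for `timedelta(minutes=1)` gives the kind of short interval that is handy while testing.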

Once the Microsoft Flow is triggered on the daily schedule, it needs to go to the SharePoint list where my tweets are saved (here, Tweets 2) and get the items in it. So add the SharePoint Get Items block to your code and point it to the appropriate Site Address and List Name as shown above.

Next, add a Condition block to check the SharePoint list and find a match for today’s date so you know which tweet to send. This proved a little challenging, so I needed to go into advanced mode and check the Post field using the following condition:

`@equals(item()?['Post'], utcnow('yyyy-MM-dd'))`

This finds the row in my tweets list whose Post field matches today’s date. It does that by checking the Post field of each item returned by Get Items for a match.
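For anyone more at home in code than in Flow's designer, the Get Items plus Condition combination behaves roughly like the Python below: go through every item and keep those whose Post value equals today's UTC date as a `yyyy-MM-dd` string. The function name `matching_items` and the item shape (dictionaries keyed by column name, with Post as a `yyyy-MM-dd` string) are assumptions for illustration.

```python
from datetime import datetime, timezone


def matching_items(items: list[dict], now_utc: datetime) -> list[dict]:
    """Return the items whose 'Post' equals today's UTC date.

    Rough equivalent of @equals(item()?['Post'], utcnow('yyyy-MM-dd'))
    evaluated for each item. `now_utc` must be timezone-aware.
    """
    today = now_utc.astimezone(timezone.utc).strftime("%Y-%m-%d")
    # .get() returns None for a missing Post instead of raising an error
    return [item for item in items if item.get("Post") == today]


# Hypothetical items as dictionaries keyed by column name
items = [
    {"Sequence": 1, "Post": "2017-05-11"},
    {"Sequence": 2, "Post": "2017-05-12"},
    {"Sequence": 3},  # no Post value
]
print(matching_items(items, datetime(2017, 5, 12, 3, 0, tzinfo=timezone.utc)))
# [{'Sequence': 2, 'Post': '2017-05-12'}]
```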

I couldn’t find how to check the date in my local time zone; the only option seemed to be UTC. With a bit more research I’m sure I can work it out, but for now my condition checks the UTC date, not my local date. That can mean a different tweet gets posted from the one listed for that day in my local time zone. For now, that isn’t a big issue, as I just want a tweet posted daily.
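To see why a UTC check can pick a different row, this Python sketch converts a single instant to both a UTC date and a local date. The fixed UTC+10 offset is a hypothetical stand-in for a local time zone and deliberately ignores daylight saving; the function name `utc_and_local_dates` is my own.

```python
from datetime import datetime, timedelta, timezone


def utc_and_local_dates(now_utc: datetime, local_tz: timezone) -> tuple[str, str]:
    """Return (UTC date, local date) for the same instant, both as yyyy-MM-dd."""
    fmt = "%Y-%m-%d"
    return (
        now_utc.astimezone(timezone.utc).strftime(fmt),
        now_utc.astimezone(local_tz).strftime(fmt),
    )


# Hypothetical local zone: a fixed UTC+10 offset (no daylight saving)
utc_plus_10 = timezone(timedelta(hours=10))
# 8 pm UTC on 11 May is already 6 am on 12 May at UTC+10
print(utc_and_local_dates(datetime(2017, 5, 11, 20, 0, tzinfo=timezone.utc), utc_plus_10))
# ('2017-05-11', '2017-05-12')
```

When the two strings differ, a condition built on the UTC date matches the row scheduled for the UTC day rather than the local one.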

So now, thanks to the Condition block, we have found the record we want to tweet for the day, so in the YES branch we need to add an action block to actually send out a tweet. The contents of the tweet will be the Body field. Use the Post a tweet action block to do this.

I then added a SharePoint Update Item action block to move the date in the Post field forward by one year. As I said earlier, I played around with different formulas to achieve this in Microsoft Flow but didn’t have any luck, so I solved it simply by adding an extra field to the SharePoint list that contains the Post date plus one year (the field I called Next Post).

Here, I update the Post field with the Next Post field so that, now the tweet has gone out, it is automatically rescheduled for the same date next year. And because Next Post is a calculated field, it automatically updates to a further year out, ready for the next time the tweet gets posted.
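Put together, the YES branch does two things per matching row: send the Body as a tweet, then copy Next Post into Post. Here is a minimal Python sketch of that step under some stated assumptions: `Row`, `run_yes_branch` and the `send_tweet` callback (a stand-in for the Post a tweet action) are all hypothetical, and Next Post is modelled as a property so that, like a calculated column, it always derives from the current Post. For simplicity it assumes no tweet is scheduled on 29 February.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class Row:
    """Hypothetical list row with Sequence, Body and Post columns."""
    sequence: int
    body: str
    post: date

    @property
    def next_post(self) -> date:
        # Like a calculated column: always derived from the current post.
        # Assumes post is not 29 February (replace() would raise ValueError).
        return self.post.replace(year=self.post.year + 1)


def run_yes_branch(row: Row, send_tweet: Callable[[str], None]) -> None:
    """Send the row's body, then reschedule it by copying Next Post into Post."""
    send_tweet(row.body)       # stand-in for the Post a tweet action
    row.post = row.next_post   # stand-in for Update Item; next_post then moves on a year


sent: list[str] = []
row = Row(116, "Hello", date(2017, 5, 12))
run_yes_branch(row, sent.append)
print(sent, row.post, row.next_post)  # ['Hello'] 2018-05-12 2019-05-12
```

Sending before updating means that, in this sketch, if `send_tweet` raises, the row's Post date is left unchanged.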

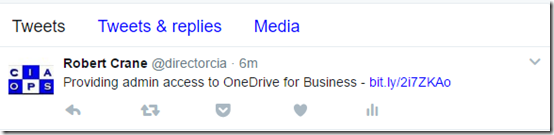

When I now run my Microsoft Flow, you can see that a tweet from my original SharePoint list was tweeted. For the eagle-eyed, you will notice this is in fact the second tweet listed in SharePoint. That’s because of the UTC timing in the condition I mentioned earlier, which is why tweet 116 was sent, not 115. That’s not a problem, but you can probably now see why I decided to give each tweet its own unique sequence number: it makes it much easier to follow what’s being sent.

Once the tweet has been sent, I look at my SharePoint list and see that tweet 116 has had its Post field incremented by one year (to 2018), as expected.

As I said, this is far from a complete or perfect solution. At this stage it does the job and gives me a basis for improving and enhancing what’s there. I’ll provide updated articles when I add major improvements to this Microsoft Flow, but for now I’m very happy with how quickly I could get this working.

Some improvements I’m now thinking of:

– Having a posted tweet simply go to the end of the queue (i.e. after the last scheduled date) rather than adding one year to it.

– Categorising tweets by their content and being able to schedule tweets based on their tags.

– Creating a tweet campaign where tweets for something like my monthly webinars could be sent out on a regular sequence.

– Doing something similar with other social media networks such as LinkedIn, Facebook, etc.

– Having blog posts I create automatically added to this recurring schedule.

– etc., etc. The potential is enormous.
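As a possible starting point for the first idea in that list, here is a Python sketch of "move to the end of the queue": instead of adding a year, the posted tweet is rescheduled for the day after the latest Post date currently in the list. The function name `reschedule_to_end` and the mapping of sequence numbers to dates are hypothetical, and it assumes one tweet per day.

```python
from datetime import date, timedelta


def reschedule_to_end(schedule: dict[int, date], posted: int) -> date:
    """Move the posted tweet to the day after the last scheduled date.

    `schedule` maps sequence numbers to Post dates and is updated in place.
    Assumes one tweet per day, so the day after the maximum date is free.
    """
    last = max(schedule.values())
    schedule[posted] = last + timedelta(days=1)
    return schedule[posted]


# Made-up queue of three daily tweets
queue = {1: date(2017, 5, 11), 2: date(2017, 5, 12), 3: date(2017, 5, 13)}
print(reschedule_to_end(queue, 1))  # 2017-05-14
```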

Hopefully, this will inspire you to take a closer look at Microsoft Flow and see what it can do to automate and streamline your business. I’m going to continue playing with Microsoft Flow, but also get into Azure Functions, Azure Logic Apps, Azure Automation runbooks and more, as I want to automate as much as I can.

Software will eat the world!