Introduction and Background

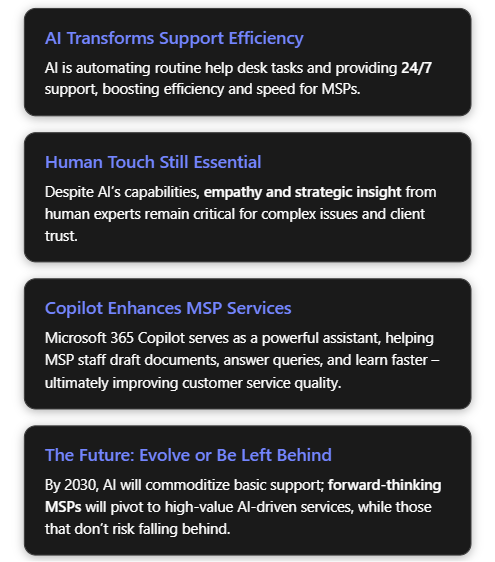

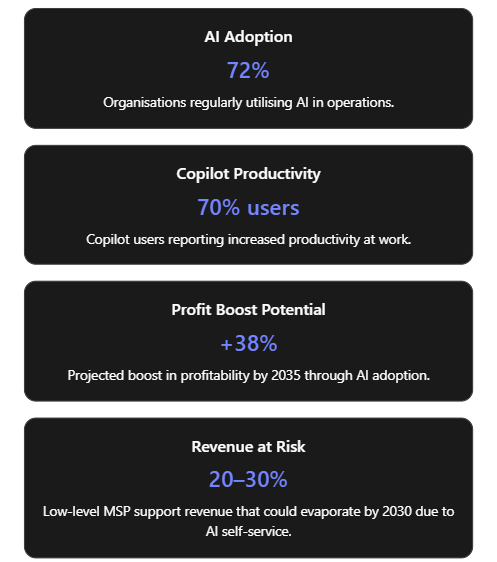

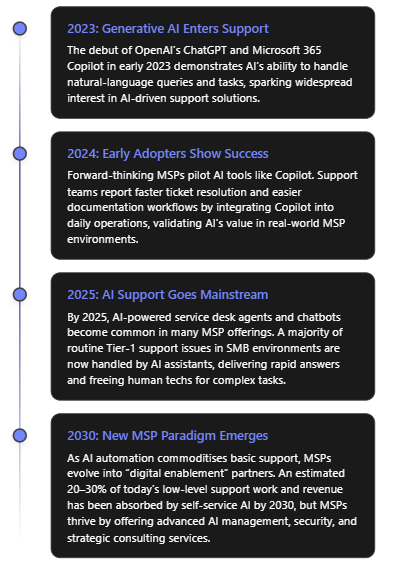

Managed Service Providers (MSPs) serving small-to-medium businesses (SMBs) typically operate help desks that handle IT support requests, from password resets to system troubleshooting. Traditionally, these support desks rely on human technicians available only during business hours, which can mean delays and higher costs. Today, artificial intelligence (AI) is revolutionising this model by introducing intelligent automation and chat-based agents that can work around the clock[1]. AI-driven service desks leverage machine learning and natural language processing to handle routine tasks (like password resets or basic user queries) with minimal human intervention[1]. This transformation is happening rapidly: as of the mid-2020s, an estimated 72% of organisations regularly use AI technologies in their operations[2]. The surge of generative AI (exemplified by OpenAI’s ChatGPT and Microsoft’s Copilot) has shown how AI can converse with users, analyse large amounts of contextual data, and generate content, making it highly relevant to customer support scenarios.

Microsoft 365 Copilot is one high-profile example of this AI wave. Introduced in early 2023 as an AI assistant across Microsoft’s productivity apps[3], Copilot combines large language models with an organisation’s own data through Microsoft Graph. For MSPs, tools like Copilot represent an opportunity to augment their help desk teams with AI capabilities within the familiar Microsoft 365 environment, ensuring data remains secure and context-specific[4]. In the following sections, we examine the positive and negative impacts of AI on SMB-focused MSP help desks, explore how MSPs can utilise Microsoft 365 Copilot to enhance service delivery, and project the long-term changes AI may bring to MSP support operations.

Positive Impacts of AI on MSP Help Desks

AI is bringing a multitude of benefits to help desk operations for MSPs, especially those serving SMB clients. Below are some of the most significant advantages, with examples:

- 24/7 Availability and Faster Response: AI-powered virtual agents (chatbots or voice assistants) can handle inquiries at any time, providing immediate responses even outside normal working hours. This round-the-clock coverage ensures no customer request has to wait until the next business day, significantly reducing response times[1]. For example, an AI service desk chatbot can instantly address a password reset request at midnight, whereas a human technician might not see it until morning. The result is improved customer satisfaction due to swift, always-on support[1][1].

- Automation of Routine Tasks: AI excels at handling repetitive, well-defined tasks, which frees up human technicians for more complex issues. Tasks like password resets, account unlocks, software installations, and ticket categorisation can be largely automated. An AI service desk can use chatbots with natural language understanding to guide users through common troubleshooting steps and resolve simple requests without human intervention[1][1]. One industry report notes that AI-driven chatbots are now capable of resolving many Level-1 support issues (e.g. password resets or printer glitches) on their own[5]. This automation not only reduces the workload on human staff but also lowers operational costs (since fewer manual labour hours are spent on low-value tasks)[1].

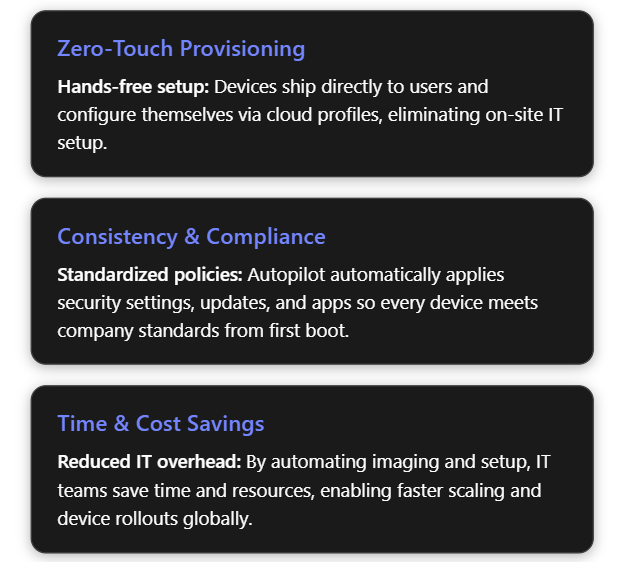

- Improved Efficiency and Cost Reduction: By automating the mundane tasks and expediting issue resolution, AI can dramatically increase the efficiency of help desk operations. Routine incidents get resolved faster, and more tickets can be handled concurrently. This efficiency translates to cost savings – MSPs can support more customers without a linear increase in headcount. A 2025 analysis of IT service management tools indicates that incorporating AI (for example, using machine learning to categorise tickets or recommend solutions) can save hundreds of man-hours each month for an MSP’s service team[6][6]. These savings come from faster ticket handling and fewer repetitive manual interventions. In fact, AI’s contribution to productivity is so significant that an Accenture study projected AI technologies could boost profitability in the IT sector by up to 38% by 2035[6], reflecting efficiency gains.

- Scalability of Support Operations: AI allows MSP help desks to scale up support capacity quickly without a proportional increase in staff. Because AI agents can handle multiple queries simultaneously and don’t tire, MSPs can on-board new clients or handle surge periods (such as a major incident affecting many users at once) more easily[1]. For instance, if dozens of customers report an email outage at the same time, an AI system could handle all incoming queries in parallel – something a limited human team would struggle with. This scalability ensures service quality remains high even as the customer base grows or during peak demand.

- Consistency and Knowledge Retention: AI tools provide consistent answers based on the knowledge they’ve been trained on. They don’t forget procedures or skip troubleshooting steps, which means more uniform service quality. If an AI is integrated with a knowledge base, it will tap the same repository of solutions every time, leading to standardized resolutions. Moreover, modern AI agents can maintain context across a conversation and even across sessions. By 2025, advanced AI service desk agents were capable of keeping track of past interactions with a client, so the customer doesn’t have to repeat information if they come back with a related issue[7]. This contextual continuity makes support interactions smoother and more personalized, even when handled by AI.

- Proactive Issue Resolution: AI’s predictive analytics capabilities enable proactive rather than merely reactive support. Machine learning models can analyze patterns in system logs and past tickets to predict incidents before they occur. For example, AI can flag that a server’s behavior is trending towards failure or that a certain user’s laptop hard drive shows signs of an impending crash, prompting preemptive maintenance. MSPs are leveraging AI to perform predictive health checks – e.g. automatically identifying anomaly patterns that precede network outages or using predictive models to schedule patches at optimal times before any disruption[6][7]. This results in fewer incidents for the help desk to deal with and reduced downtime for customers. AI can also intelligently prioritize tickets that are at risk of breaching service level agreement (SLA) targets by learning from historical data[6], ensuring critical issues get speedy attention.

- Enhanced Customer Experience and Personalisation: Counterintuitively, AI can help deliver a more personalized support experience for clients. By analysing customer data and past interactions, AI systems can tailor responses or suggest solutions that are particularly relevant to that client’s history and environment[7]. For example, an AI might recognize that a certain client frequently has issues with their email system and proactively suggest steps or upgrades to preempt those issues. AI chatbots can also dynamically adjust their language tone and complexity to match the user’s skill level or emotional state. Some advanced service desk AI can detect sentiment – if a user sounds frustrated, the system can route the conversation to a human or respond in a more empathetic tone automatically[1][1]. Multilingual support is another boon: AI agents can fluently support multiple languages, enabling an MSP to serve diverse or global customers without needing native speakers of every language on staff[7]. All these features drive up customer satisfaction, as clients feel their needs are anticipated and understood. Surveys have shown faster service and 24/7 availability via AI lead to higher customer happiness ratings on support interactions[1].

- Allowing Human Focus on Complex Tasks: Perhaps the most important benefit is that by offloading simple queries to AI, human support engineers have more bandwidth for complex problem-solving and value-added work. Rather than spending all day on password resets and setting up new accounts, the human team members can focus on high-priority incidents, strategic planning for clients, or learning new technologies. MSP technicians can devote attention to issues that truly require human creativity and expertise (like diagnosing novel problems or providing consulting advice to improve a client’s infrastructure) while the AI handles the “busy work.” This not only improves morale and utilisation of skilled engineers, but it also delivers better outcomes for customers when serious issues arise, because the team isn’t bogged down with minor tasks. As one service desk expert put it, with AI handling Level-1 tickets, MSPs can redeploy their technicians to activities that more directly “impact the business”, such as planning IT strategy or digital transformation initiatives for clients[6]. In other words, AI raises the ceiling of what the support team can achieve.
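To make the proactive-support idea in the list above more concrete, here is a minimal sketch of one very simple way to flag an unusual reading in a monitored metric: comparing each value against the mean and standard deviation of a short trailing window. The function name, the window size, the threshold, and the sample latency figures are all assumptions chosen for illustration; the tools described in this report would typically rely on trained models rather than a fixed statistical rule.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window
    by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical disk latency samples (milliseconds) with one obvious spike.
disk_latency_ms = [10, 11, 10, 12, 11, 10, 11, 45, 12, 11]
print(flag_anomalies(disk_latency_ms))  # → [7]
```

A flagged index could then open a ticket or schedule maintenance before the user notices a problem. Note that a large spike also inflates the window that follows it, so a rule this simple can miss a second anomaly shortly after the first.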

In summary, AI empowers SMB-focused MSPs to provide faster, more efficient, and more consistent support services to their customers. It reduces wait times, solves many problems instantly, and lets the human team shine where they are needed most. Many MSPs report that incorporating AI service desk tools has led to higher customer satisfaction and improved service quality due to these factors[1].
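The ticket categorisation and SLA prioritisation mentioned above can be sketched in a few lines. This is a simplified stand-in under stated assumptions: the keyword map, the `Ticket` fields, and the "time remaining until the SLA deadline" ordering are hypothetical, and they replace the machine-learning models that commercial service desk products would use to learn from historical data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical keyword map; a production system would more likely
# use a trained text classifier.
CATEGORY_KEYWORDS = {
    "password_reset": ("password", "locked out"),
    "printer": ("printer", "print queue"),
    "email": ("outlook", "mailbox", "email"),
}


@dataclass
class Ticket:
    ticket_id: str
    text: str
    opened_at: datetime
    sla_hours: float  # agreed resolution time for this ticket


def categorise(text):
    """Return the first category whose keywords appear in the text."""
    lowered = text.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "needs_human_triage"


def sla_time_remaining(ticket, now):
    """Time left before the ticket breaches its SLA (negative if breached)."""
    return ticket.opened_at + timedelta(hours=ticket.sla_hours) - now


def prioritise(tickets, now):
    """Order tickets so those closest to an SLA breach come first."""
    return sorted(tickets, key=lambda t: sla_time_remaining(t, now))


now = datetime(2025, 1, 6, 12, 0)
queue = [
    Ticket("T1", "I forgot my password", datetime(2025, 1, 6, 9, 0), 8),
    Ticket("T2", "Printer jams on every job", datetime(2025, 1, 6, 11, 0), 2),
    Ticket("T3", "Server room smells of smoke", datetime(2025, 1, 6, 11, 30), 1),
]
for ticket in prioritise(queue, now):
    print(ticket.ticket_id, categorise(ticket.text))
```

In this sketch anything the keyword map does not recognise falls through to `needs_human_triage`, which reflects the point made throughout this report: automation takes the well-defined requests, and people take the rest.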

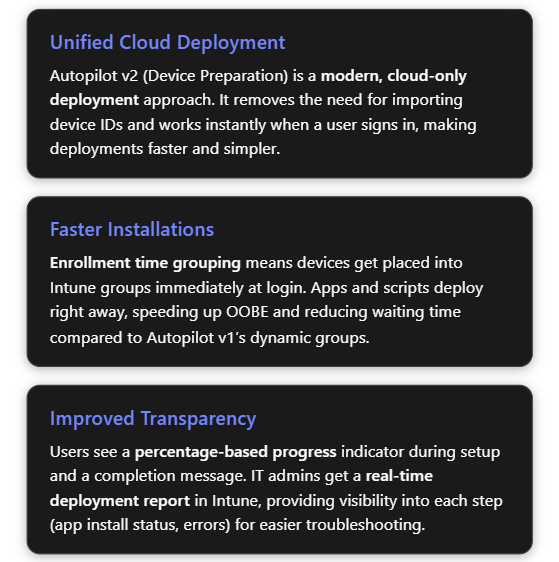

Challenges and Risks of AI in Help Desk Operations

Despite the clear advantages, the integration of AI into help desk operations is not without challenges. It’s important to acknowledge the potential drawbacks, risks, and limitations that come with relying on AI for customer support:

- Lack of Empathy and Human Touch: One of the most cited limitations of AI-based support is the absence of genuine empathy. AI lacks emotional intelligence – it cannot truly understand or share human feelings. While AI can be programmed to recognise certain keywords or even tone of voice indicating frustration, its responses may still feel canned or unempathetic. Customers dealing with stressful IT outages or complex problems often value a human who can listen and show understanding. An AI, no matter how advanced, may respond to an angry or anxious customer with overly formal or generic language, missing the mark in addressing the customer’s emotional state[7]. Over-reliance on AI chatbots can lead to customers feeling that the service is impersonal. For example, if a client is upset about recurring issues, an AI might continue to give factual solutions without acknowledging the client’s frustration, potentially aggravating the situation[7][7]. **In short, AI’s inability to *“read between the lines”* or pick up subtle cues can result in a poor customer experience in sensitive scenarios**[7].

- Handling of Complex or Novel Issues: AI systems are typically trained on existing data and known problem scenarios. They can struggle when faced with a completely new, unfamiliar problem, or one that requires creative thinking and multidisciplinary knowledge. A human technician might be able to use intuition or past analogies to tackle an odd issue, whereas an AI could be stumped if the problem doesn’t match its training data. Additionally, many complex support issues involve nuanced judgement calls – understanding business impact, making decisions with incomplete information, or balancing multiple factors. AI’s problem-solving is limited to patterns it has seen; it might give incorrect answers (or no answer) if confronted with ambiguity or a need for outside-the-box troubleshooting. This is related to the phenomenon of AI “hallucinations” in generative models, where an AI might produce a confident-sounding but completely incorrect solution if it doesn’t actually know the answer. Without human oversight, such errors could mislead customers. Thus, MSPs must be cautious: AI is a great first-line tool, but complex cases still demand human expertise and critical thinking[1].

- Impersonal Interaction & Client Relationship Concerns: While AI can simulate conversation, many clients can tell when they’re dealing with a bot versus a human. For longer-term client relationships (which are crucial in the MSP industry), solely interacting through AI might not build the personal rapport that comes from human interaction. Clients often appreciate knowing there’s a real person who understands their business on the other end. If an MSP over-automates the help desk, some clients might feel alienated or think the MSP is “just treating them like a ticket number.” As noted earlier, AI responses can be correct but impersonal, lacking the warmth or context a human would provide[7]. Over time, this could impact customer loyalty. MSPs thus need to strike a balance – using AI for efficiency while maintaining human touchpoints to nurture client relationships[7].

- Potential for Errors and Misinformation: AI systems are not infallible. They might misunderstand a user’s question (especially if phrased unconventionally), or access outdated/incomplete data, leading to wrong answers. If an AI-driven support agent gives an incorrect troubleshooting step, it could potentially make a problem worse (imagine an AI telling a user to run a wrong command that causes data loss). Without a human double-check, these errors could slip through. Moreover, advanced generative AI might sometimes fabricate plausible-sounding answers (hallucinations) that are entirely wrong. Ensuring the AI is thoroughly tested and paired with validation steps (or easy escalation to humans) is critical. Essentially, relying solely on AI without human oversight introduces a risk of incorrect solutions, which could harm customer trust or even violate compliance if the AI gives advice that doesn’t meet regulatory standards.

- Data Security and Privacy Risks: AI help desk implementations often require feeding customer data, system logs, and issue details into AI models. If not managed carefully, this raises privacy and security concerns. For example, sending sensitive information to an external AI service (like a cloud-based chatbot) could inadvertently expose that data. There have been cautionary tales – such as incidents where employees used public AI tools (e.g., ChatGPT) with confidential data and caused breaches of privacy[4]. MSPs must ensure that any AI they use is compliant with data protection regulations and that clients’ data is handled safely (encrypted in transit and at rest, access-controlled, and not retained or used for AI training without consent)[8]. Another aspect is ensuring the AI only has access to information it should. In Microsoft 365 Copilot’s case, it respects the organisation’s permission structure[4], but if an MSP used a more generic AI, they must guard against information bleed between clients. AI systems also need constant monitoring for unusual activities or potential vulnerabilities, as malicious actors might attempt to manipulate AI or exploit it to gain information[8]. In summary, introducing AI means MSPs have to double down on cybersecurity and privacy audits around their support tools.

- Integration and Technical Compatibility Issues: Deploying AI into an existing MSP environment is not simply “plug-and-play.” Many MSPs manage a heterogeneous mix of client systems, some legacy and some modern. AI tools may struggle to integrate with older software or disparate platforms[7]. For instance, an AI that works great with cloud-based ticket data may not access information from a client’s on-premises legacy database without custom integration. Data might exist in silos (separate systems for ticketing, monitoring, knowledge base, etc.), and connecting all these for the AI to have a full picture can be challenging[7]. MSPs might need to invest significant effort to unify data sources or update infrastructure to be AI-ready. During integration, there could be temporary disruptions or a need to reconfigure workflows, which in the short term can hamper productivity or confuse support staff[7][7]. For smaller MSPs, lacking in-house AI/ML expertise, integrating and maintaining an AI solution can be a notable hurdle, potentially requiring new hires or partnerships.

- Over-reliance and Skill Erosion: There is a softer risk as well: if an organisation leans too heavily on AI, their human team might lose opportunities to practice and sharpen their own skills on simpler issues. New support technicians often “learn the ropes” by handling common Level-1 problems and gradually taking on more complex ones. If AI takes all the easy tickets, junior staff might not develop a breadth of experience, which could slow their growth. Additionally, there’s the strategic risk of over-relying on AI for decision-making. AI can provide data-driven recommendations, but it doesn’t understand business strategy or ethics at a high level[7][7]. MSP managers must be careful not to substitute AI outputs for their own judgement, especially in decisions about how to service clients or allocate resources. Important decisions still require human insight – AI might suggest a purely cost-efficient solution, but a human leader will consider client relationships, long-term implications, and ethical aspects that AI would miss[7][7].

- Customer Pushback and Change Management: Finally, some end-users simply prefer human interaction. If an MSP suddenly routes all calls to a bot, some customers might react negatively, perceiving it as a downgrade in service quality. There can be a transition period where customers need to be educated on how to use the new AI chatbot or voice menu. Ensuring a smooth handoff to a human agent on request is vital to avoid frustration. MSPs have to manage this change carefully, communicating the benefits of the new system (such as faster answers) while assuring clients that humans are still in the loop and reachable when needed.

In essence, while AI brings remarkable capabilities to help desks, it is not a panacea. The human element remains crucial: to provide empathy, handle exceptions, verify AI outputs, and maintain strategic oversight[7][7]. Many experts stress that the optimal model is a hybrid approach – AI and humans working together, where AI handles the heavy lifting but humans guide the overall service and step in for the nuanced parts[7][7]. MSPs venturing into AI-powered support must invest in training their staff to work alongside AI, update processes for quality control, and maintain open channels for customers to reach real people when necessary. Striking the right balance will mitigate the risks and ensure AI augments rather than alienates.
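The hybrid model described above depends on a clear rule for when the AI should hand a conversation to a person. The following is a minimal sketch of such a rule, assuming three triggers drawn from this section: an explicit request for a human, signs of frustration, and a low-confidence answer. The phrase lists, the `BotAnswer` type, and the `confidence` score are hypothetical; a real deployment would more likely use a sentiment model and whatever confidence signal its AI platform exposes.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative phrase lists only (assumptions, not taken from any product).
HUMAN_REQUEST_PHRASES = ("speak to a human", "real person", "talk to someone")
FRUSTRATION_CUES = ("unacceptable", "still broken", "frustrated", "third time")


@dataclass
class BotAnswer:
    text: str
    confidence: float  # hypothetical 0.0-1.0 score supplied by the AI backend


def escalation_reason(user_message: str, answer: BotAnswer,
                      min_confidence: float = 0.75) -> Optional[str]:
    """Return why the chat should go to a human, or None to let the bot reply."""
    lowered = user_message.lower()
    if any(phrase in lowered for phrase in HUMAN_REQUEST_PHRASES):
        return "user_requested_human"
    if any(cue in lowered for cue in FRUSTRATION_CUES):
        return "frustration_detected"
    if answer.confidence < min_confidence:
        return "low_confidence"
    return None


draft = BotAnswer("Try restarting the print spooler service.", confidence=0.9)
print(escalation_reason("The printer is still broken after your fix", draft))
```

Checking the explicit request first means a customer who asks for a person always gets one, regardless of how confident the AI is in its own answer.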

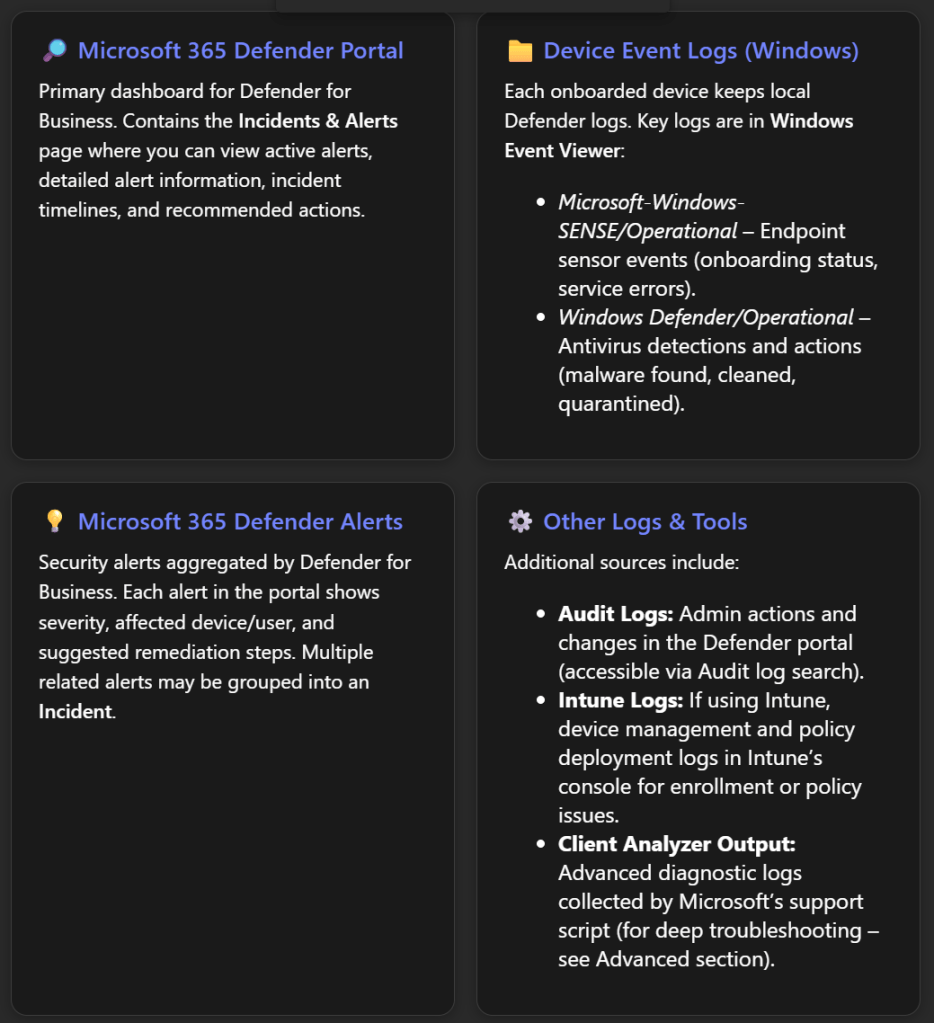

To summarise the trade-offs, the table below contrasts AI service desks with traditional human support on key factors:

| Aspect | AI Service Desk | Human Helpdesk Agent |
|---|---|---|
| Response Time | Instant responses to queries[1] | Varies based on availability (can be minutes to hours)[1] |
| Availability | 24/7 continuous operation[1] | Limited to business/support hours[1] |
| Consistency/Accuracy | High on well-known issues (follows predefined solutions exactly)[1] | Strong on complex troubleshooting; can adapt when a known solution fails[1] |
| Personalisation & Empathy | Limited emotional understanding; responses feel robotic if issue is nuanced[1] | Natural empathy and personal touch; can adjust tone and approach to the individual[1] |
| Scalability | Easily handles many simultaneous requests (no queue for simple issues)[1] | Scalability limited by team size; multiple requests can strain capacity |
| Cost | Lower marginal cost per ticket (after implementation)[1] | Higher ongoing cost (salaries, training for staff)[1] |

Table: AI vs Human Support – Both have strengths; best results often come from combining them.

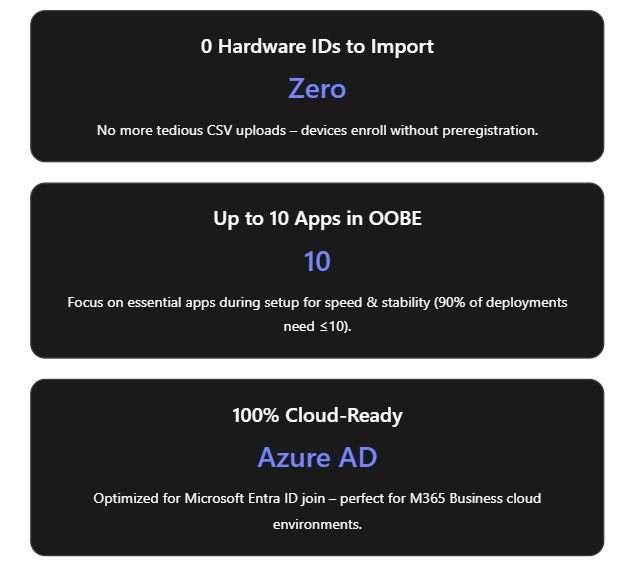

Using Microsoft 365 Copilot in an SMB MSP Environment

Microsoft 365 Copilot is a cutting-edge AI assistant that MSPs can leverage internally to enhance help desk and support operations. Copilot integrates with tools like Teams, Outlook, Word, PowerPoint, and more – common applications that MSP staff use daily – and supercharges them with AI capabilities. Here are several ways an SMB-focused MSP can use M365 Copilot to take advantage of AI and provide better customer service:

- Real-time assistance during support calls (Teams Copilot): Copilot in Microsoft Teams can act as a real-time aide for support engineers. For example, during a live call or chat with a customer, a support agent can ask Copilot in Teams contextual questions to get information or troubleshooting steps without leaving the conversation. One MSP Head-of-Support shared that “Copilot in Teams can answer specific questions about a call with a user… providing relevant information and suggestions during or after the call”, saving the team time they’d otherwise spend searching manuals or past tickets[9]. The agent can even ask Copilot to summarize what was discussed in a meeting or call, and it will pull the key details for reference. This means the technician stays focused on the customer instead of frantically flipping through knowledge base articles. The information Copilot provides can be directly added to ticket notes, making documentation faster and more accurate[9]. Ultimately, this leads to quicker resolutions and more thorough records of what was done to fix an issue.

- Faster documentation and knowledge base creation (Word Copilot): Documentation is a big part of MSP support – writing up how-to guides, knowledge base articles, and incident reports. Copilot in Word helps by drafting and editing documentation alongside the engineer. Support staff can simply prompt Copilot, e.g., “Draft a knowledge base article on how to connect to the new VPN,” and Copilot will generate a first draft by pulling relevant info from existing SharePoint files or previous emails[3][3]. In one use case, an MSP team uses Copilot to create and update technical docs like user guides and policy documents; it “helps us write faster, better, and more consistently, by suggesting improvements and corrections”[9]. Copilot ensures the writing is clear and grammatically sound, and it can even check for company-specific terminology consistency. It also speeds up reviews by highlighting errors or inconsistencies and proposing fixes[9]. The result is up-to-date documentation produced in a fraction of the time it used to take, which means customers and junior staff have access to current, high-quality guidance sooner.

- Streamlining employee training and support tutorials (PowerPoint Copilot): Training new support staff or educating end-users often involves creating presentations or guides. Copilot in PowerPoint can transform written instructions or outlines into slide decks complete with suggested images and formatting. An MSP support team described using Copilot in PowerPoint to auto-generate training slides for common troubleshooting procedures[9]. They would input the steps or a rough outline of resolving a certain issue, and Copilot would produce a coherent slide deck with graphics, which they could then fine-tune. Copilot even fetches appropriate stock images based on content to make slides more engaging[9], eliminating the need to manually search for visuals. This capability lets the MSP rapidly produce professional training materials or client-facing “how-to” guides. For example, after deploying new software for a client, the MSP could quickly put together an end-user training presentation with Copilot’s help, so the client’s staff can get up to speed faster.

- Accelerating research and problem-solving (Edge Copilot): Often, support engineers need to research unfamiliar problems or learn about a new technology. Copilot in Microsoft Edge (the browser) can serve as a research assistant by providing contextual web answers and learning resources. Instead of doing a generic web search and sifting through results, a tech can ask Copilot in Edge something like, “How do I resolve error code X in Windows 11?” and get a distilled answer or relevant documentation links right away[9]. Copilot in Edge was noted to “provide the most relevant and reliable information from trusted sources…almost replacing Google search” for one MSP’s technical team[9]. It can also suggest useful tutorials or forums to visit for deeper learning. This reduces the time spent hunting for solutions online and helps the support team solve issues faster. It’s especially useful for SMB MSPs who cover a broad range of technologies with lean teams – Copilot extends their knowledge by quickly tapping into the vast information on the web.

- Enhancing customer communications (Outlook Copilot & Teams Chat): Communications with customers – whether updates on an issue, reports, or even drafting an outage notification – can be improved with Copilot. In Outlook, Copilot can summarize long email threads and draft responses. Imagine an MSP engineer inherits a complex email chain about a persistent problem; Copilot can summarize what has been discussed, highlight the different viewpoints or concerns from each person, and even point out unanswered questions[3]. This allows the engineer to grasp the situation quickly without reading every email in detail. Then, the engineer can ask Copilot to draft a reply email that addresses those points – for instance, “write a response thanking the client for their patience and summarizing the next steps we will take to fix the issue.” Copilot will generate a polished, professional email in seconds, which the engineer can review and send[3]. This is a huge time-saver and ensures communication is clear and well-formulated. In Microsoft Teams chats, Business Chat (Copilot Chat) can pull together data from multiple sources to answer a question or produce an update. An MSP manager could ask, “Copilot, generate a brief status update for Client X’s network outage yesterday,” and it could gather info from the technician’s notes, the outage Teams thread, and the incident ticket to produce a cohesive update message for the client. By using Copilot for these tasks, MSPs can respond to clients more quickly and with well-structured communications, improving professionalism and client confidence in the support they receive[3][3].

- Knowledge integration and context: Because Microsoft 365 Copilot works within the MSP’s tenant and on top of its data (documents, emails, calendars, tickets, etc.), it can connect dots that might be missed otherwise. For example, if a customer asks, “Have we dealt with this printer issue before?”, an engineer could query Business Chat, which might pull evidence from a past meeting note, a SharePoint document with troubleshooting steps, and a previous ticket log, all summarized in one answer[3]. This kind of integrated insight is incredibly valuable for institutional knowledge – the MSP effectively gains an AI that knows all the past projects and can surface the right info on demand. It means faster resolution, and it demonstrates to customers that “institutional memory” is retained even as staff come and go.

Overall, Microsoft 365 Copilot acts as a force-multiplier for MSP support teams. It doesn’t replace the engineers, but rather augments their abilities – handling the grunt work of drafting, searching, and summarising so that the human experts can focus on decision-making and problem-solving. By using Copilot internally, an MSP can deliver answers and solutions to customers more quickly, with communications that are well-crafted and documentation that is up-to-date. It also helps train and onboard new team members, as Copilot can quickly bring them up to speed on procedures and past knowledge.

From the customer’s perspective, the use of Copilot by their MSP translates to better service: faster turnaround on tickets, more thorough documentation provided for solutions, and generally a more proactive support approach. For example, customers might start receiving helpful self-service guides or troubleshooting steps that the MSP created in half the time using Copilot – so issues get resolved with fewer back-and-forth interactions.

It’s important to note that Copilot operates within the Microsoft 365 security and compliance framework, meaning data stays within the tenant’s boundaries. This addresses some of the privacy concerns of using AI in support. Unlike generic AI tools, Copilot will only show content that the MSP and its users have permission to access[4]. This feature is crucial when dealing with multiple client data sets and sensitive information; it ensures that leveraging AI does not inadvertently leak information between contexts.
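The principle of only surfacing content a user is permitted to see can be illustrated with a small sketch. This is not how Copilot is implemented; it is a generic illustration of permission trimming, in which documents are filtered by the requesting user's group membership before any of them are considered as context for an answer. The `Document` type, the group names, and the client labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    client: str
    allowed_groups: frozenset  # groups permitted to read this document


def visible_documents(documents, user_groups):
    """Keep only documents that share at least one group with the user."""
    groups = frozenset(user_groups)
    return [doc for doc in documents if doc.allowed_groups & groups]


def build_context(documents, user_groups, query):
    """Return titles of permitted documents whose title matches a query term."""
    terms = query.lower().split()
    return [
        doc.title
        for doc in visible_documents(documents, user_groups)
        if any(term in doc.title.lower() for term in terms)
    ]


library = [
    Document("Client A VPN setup", "Client A", frozenset({"client-a-support"})),
    Document("Client B VPN setup", "Client B", frozenset({"client-b-support"})),
]
# An engineer assigned only to Client A never sees Client B's material.
print(build_context(library, ["client-a-support"], "vpn"))
```

The key design choice is that filtering happens before matching, so content from another client cannot reach the answer even when it is the closest match to the query.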

In conclusion, adopting Microsoft 365 Copilot allows an SMB MSP to ride the AI wave in a controlled, enterprise-friendly manner. It directly boosts the productivity of the support team and helps standardise best practices across the organisation. As AI becomes a bigger part of daily work, tools like Copilot give MSPs a head start in using these capabilities to benefit their customers, without having to build an AI from scratch.

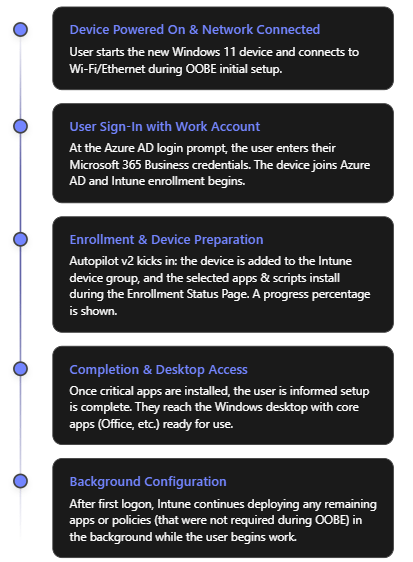

Long-Term Outlook: The Future of MSP Support in the AI Era

Looking ahead, the influence of AI on MSP-provided support is only expected to grow. Industry observers predict significant changes in how MSPs operate over the next 5–10 years as AI technologies mature. Here are some key projections for the longer-term impact of AI on MSPs and their help desks:

- Commoditisation of Basic Services: Over the long term, many basic IT support services are likely to become commoditised or bundled into software. For instance, routine monitoring, patch management, and straightforward troubleshooting might be almost entirely automated by AI systems. Microsoft and other vendors are increasingly building AI “co-pilots” directly into their products (as indicated by features rolling out in tools by 2025), allowing end-users to self-serve solutions that once required an MSP’s intervention[5][5]. As a result, MSPs may find that the traditional revenue from things like alert monitoring or simple ticket resolution diminishes. In fact, experts predict that by 2030, about a quarter of the current low-complexity ticket volume will vanish – either resolved automatically by AI or handled by intuitive user-facing AI assistants[5]. This means MSPs must prepare for possible pressure on the classic “all-you-can-eat” support contracts, as clients question paying for tasks that AI can do cheaply[5]. We may see pricing models shift from per-seat or per-ticket to outcome-based agreements where the focus is on uptime and results (with AI silently doing much of the work in the background)[5].

- New High-Value Services and Roles: On the flip side, AI will open entirely new service opportunities for MSPs who adapt. Just as some revenue streams shrink, others will grow or emerge. Key areas poised for expansion include:

- AI Oversight and Management: Businesses will need help deploying, tuning, and governing AI systems. MSPs can provide services like training AI on custom data, monitoring AI performance, and ensuring compliance (preventing biased outcomes or data leakage). One new role mentioned is managing “prompt engineering” and data quality to avoid AI errors like hallucinations[5]. MSPs could bundle services to regularly check AI outputs, update the knowledge base the AI draws from, and keep the AI models secure and up-to-date.

- AI-Enhanced Security Services: The cybersecurity landscape is escalating as both attackers and defenders leverage AI. MSPs can develop AI-driven security operation center (SOC) services, using advanced AI to detect anomalies and respond to threats faster than any human could[5]. Conversely, they must counter AI-empowered cyber attacks. This arms race creates demand for MSP-led managed security services (like “MDR 2.0” – Managed Detection & Response with AI) that incorporate AI tools to protect clients[5]. Many MSPs are already exploring such offerings as a higher-margin, value-add service.

- Strategic AI Consulting: As AI pervades business processes, clients (especially SMBs) will turn to their MSPs for guidance on how to integrate AI into their operations. MSPs can evolve into consultants for AI adoption, advising on the right AI tools, data strategies, and process changes for each client. They might conduct AI readiness assessments and help implement AI in areas beyond IT support, such as analytics or workflow automation, effectively becoming a “virtual CIO for AI” for small businesses[5].

- Data Engineering and Integration: With AI’s hunger for data, MSPs might offer services to clean, organise, and integrate client data so that AI systems perform well. For instance, an MSP could consolidate a client’s disparate databases and migrate the data to cloud platforms where AI can access it. This ensures the client’s AI (or Copilot-like system) has high-quality data to work with, improving outcomes[2]. It’s a natural extension of the MSP’s role in managing infrastructure and could become a significant service line (data pipelines, data lakes, etc., managed for SMBs).

- Industry-specific AI Solutions: MSPs might develop expertise in specific verticals (e.g., healthcare, legal, manufacturing) and provide custom AI solutions tuned to those domains[5]. For example, an MSP could offer an AI toolset for medical offices that assists with compliance (HIPAA) and automates patient IT support with knowledge of healthcare workflows. These niche AI services could command premium prices and differentiate MSPs in the market.

- Evolution of MSP Workforce Skills: The skill profile of MSP staff will evolve. The level-1 help desk role may largely transform into an AI-supported custodian role, where instead of manually doing the work, the technician monitors AI outputs and handles exceptions. There will be greater demand for skills in AI and data analytics. We’ll see MSPs investing in training their people on AI administration, scripting/automation, and interpreting AI-driven insights. Some positions might shift from pure technical troubleshooting to roles like “Automation Specialist” or “AI Systems Analyst.” At the same time, soft skills (like client relationship management) become even more important for humans since they’ll often be stepping in primarily for the complex or sensitive interactions. MSPs that encourage their staff to upskill in AI will stay ahead. As one playbook suggests, MSPs should “upskill NOC engineers in Python, MLOps, and prompt-engineering” to thrive in the coming years[5].

- Business Model and Competitive Landscape Changes: AI may lower the barrier to entry for some IT services, meaning MSPs face new competition (for example, a product vendor might bundle AI support directly, or a client might rely on a generic AI service instead of calling the MSP for minor issues). To stay competitive, MSPs will likely transition from being pure “IT fixers” to being partners in the continuous improvement of clients’ technology. Contracts might include AI as part of the service – for example, MSPs offering a proprietary AI helpdesk portal to clients as a selling point. The overall managed services market might actually expand as even very small businesses can afford AI-augmented support (increasing the TAM – total addressable market)[5]. Rather than needing a large IT team, a five-person company could engage an MSP that uses AI to give it an enterprise-grade support experience. In this scenario, AI helps MSPs scale down-market to micro businesses and also up-market by handling more endpoints per engineer than before. Analysts foresee that MSPs could morph into “Managed Digital Enablement Providers”, focusing not just on keeping the lights on, but on actively enabling new tech capabilities (like AI) for clients[5]. The MSPs who embrace this and market themselves as such will stand out.

- MSPs remain indispensable (if they adapt): A looming question is whether AI will eventually make MSPs obsolete, as some pessimists suggest. However, the consensus in the industry is that MSPs will continue to play a critical role, but it will be a changed role. AI is a tool – a powerful one – but it still requires configuration, oversight, and alignment with business goals. MSPs are well positioned to fill that need for their clients. The human element – strategic planning, empathy, complex integration, and handling novel challenges – will keep MSPs relevant. In fact, AI could make MSPs more valuable by enabling them to deliver higher-level outcomes. Those MSPs that fail to incorporate AI may find themselves undercut on price and losing clients to more efficient competitors, akin to “the taxi fleet in the age of Uber” – still around but losing ground[5]. On the other hand, those that invest in AI capabilities can differentiate and potentially command higher margins (e.g., an MSP known for its advanced AI-based services can justify premium pricing and will be attractive to investors as well)[5]. Already, by 2025, MSP industry experts note that buyers looking to acquire or partner with MSPs ask about their AI adoption plan: the absence of a strategy often leads to a devaluation, whereas a clear AI roadmap is seen as a sign of an innovative, future-proof MSP[5].
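
To make the commoditisation point above more concrete, the short Python sketch below works through the arithmetic of a per-ticket contract when roughly a quarter of low-complexity tickets disappear. Only the 25% share comes from the projection cited in the text[5]; the ticket counts, the price per ticket, and the names `TicketMix`, `project_ticket_volume`, and `per_ticket_revenue` are hypothetical values and helpers chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class TicketMix:
    """Monthly ticket counts for one client (all values hypothetical)."""
    low_complexity: int
    other: int


def project_ticket_volume(mix: TicketMix, automated_share: float = 0.25) -> TicketMix:
    """Return the ticket mix after a share of low-complexity tickets is automated.

    The default of 0.25 mirrors the "about a quarter" projection quoted in
    the text; it is an assumption, not a measured value.
    """
    if not 0.0 <= automated_share <= 1.0:
        raise ValueError("automated_share must be between 0 and 1")
    remaining_low = round(mix.low_complexity * (1.0 - automated_share))
    return TicketMix(low_complexity=remaining_low, other=mix.other)


def per_ticket_revenue(mix: TicketMix, price_per_ticket: float) -> float:
    """Monthly revenue under a simple per-ticket pricing model."""
    return (mix.low_complexity + mix.other) * price_per_ticket


# Hypothetical client: 400 low-complexity and 100 other tickets per month,
# billed at an illustrative flat 20.0 per ticket.
current = TicketMix(low_complexity=400, other=100)
projected = project_ticket_volume(current)

print("Tickets today:    ", current)
print("Tickets projected:", projected)
print("Revenue today:    ", per_ticket_revenue(current, 20.0))
print("Revenue projected:", per_ticket_revenue(projected, 20.0))
```

With these made-up inputs, billable tickets fall from 500 to 400 per month, so per-ticket revenue drops by a fifth even though the MSP's responsibility for uptime is unchanged. That gap is one way to see why outcome-based agreements may become more attractive than per-ticket pricing.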

In summary, the long-term impact of AI on MSP support is a shift in the MSP value proposition rather than a demise. Routine support chores will increasingly be handled by AI, which is “the new normal” of service delivery. Simultaneously, MSPs will gravitate towards roles of AI enablers, advisors, and security guardians for their clients. By embracing this evolution, MSPs can actually improve their service quality and deepen client relationships – using AI not as a competitor, but as a powerful ally. The MSP of the future might spend less time resetting passwords and more time advising a client’s executive team on technology strategy with AI-generated insights. Those who adapt early will likely lead the market, while those slow to change may struggle.

Ultimately, AI is a force-multiplier, not a wholesale replacement for managed services[5]. The most successful MSPs will be the ones that figure out how to blend AI with human expertise, providing a seamless, efficient service that still feels personal and trustworthy. As we move toward 2030 and beyond, an MSP’s ability to harness AI – for their own operations and for their clients’ benefit – will be a key determinant of their success in the industry.

References

[1] AI Service Desk: Advantages, Risks and Creative Usages

[2] How MSPs Can Help Organizations Adopt M365 Copilot & AI

[3] Introducing Copilot for Microsoft 365 | Microsoft 365 Blog

[4] The Practical MSP Guide to Microsoft 365 Copilot

[5] AI & Agentic AI in Managed Services: Threat or Catalyst?

[6] How AI help MSPs increase their bottom line in 2025 – ManageEngine

[7] What AI Gets Right (and Wrong) About Running an MSP in 2025 and Beyond

[8] Exploring the Risks of Generative AI in IT Helpdesks: Mitigating Risks

[9] How Copilot for Microsoft 365 Enhances Service Desk Efficiency: Alex’s …