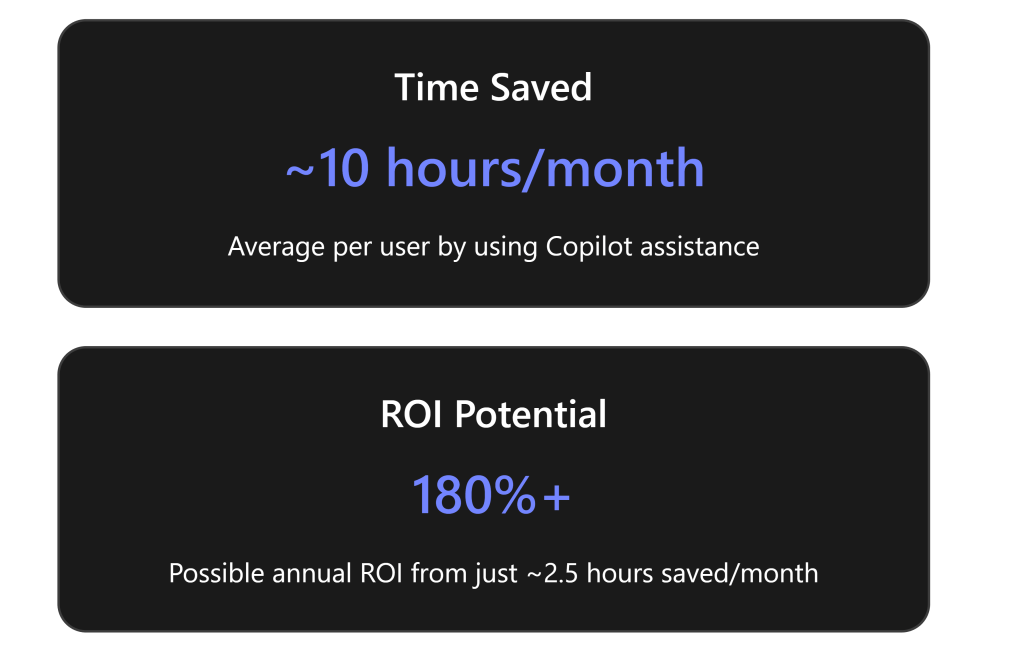

Executive Summary

The deployment of Copilot Studio agents within Microsoft Teams raises questions about data access and response completeness, particularly when the agents interact with users holding different Microsoft 365 Copilot licenses. This report analyzes these interactions, focusing on the differential access to work data and on whether the agent notifies users when an answer is partial.

A primary finding is that a user possessing a Microsoft 365 Copilot license will indeed receive more comprehensive and contextually relevant responses from a Copilot Studio agent. This enhanced completeness is directly attributable to Microsoft 365 Copilot’s inherent capability to leverage the Microsoft Graph, enabling access to a user’s authorized organizational data, including content from SharePoint, OneDrive, and Exchange.1 Conversely, users without this license will experience limitations in accessing such personalized work data, resulting in responses that are less complete, more generic, or exclusively derived from publicly available information or pre-defined knowledge sources.3

A critical observation is that Copilot Studio agents are not designed to explicitly notify users when a response is partial or incomplete due to licensing constraints or insufficient data access permissions. Instead, the agent’s operational model involves silently omitting any content from knowledge sources that the querying user is not authorized to access.4 In situations where the agent cannot retrieve pertinent information, it typically defaults to generic fallback messages, such as “I’m sorry. I’m not sure how to help with that. Can you try rephrasing?”.5 This absence of explicit, context-specific notification poses a notable challenge for managing user expectations and ensuring a transparent user experience.

Furthermore, while it is technically feasible to make Copilot Studio agents accessible to users without a full Microsoft 365 Copilot license, interactions that involve accessing shared tenant data (e.g., content from SharePoint or via Copilot connectors) will incur metered consumption charges. These charges are typically billed through Copilot Studio’s pay-as-you-go model.3 In stark contrast, users with a Microsoft 365 Copilot license benefit from “zero-rated usage” for these types of interactions when conducted within Microsoft 365 services, eliminating additional costs for accessing internal organizational data.6 These findings underscore the importance of strategic licensing, robust governance, and clear user communication for effective AI agent deployment.

Introduction

The integration of artificial intelligence (AI) agents into enterprise workflows is rapidly transforming how organizations operate, particularly within collaborative platforms like Microsoft Teams. Platforms such as Microsoft Copilot Studio empower businesses to develop and deploy intelligent conversational agents that enhance employee productivity, streamline information retrieval, and automate routine tasks. As these AI capabilities become increasingly central to organizational efficiency, a thorough understanding of their operational characteristics, especially concerning data interaction and user experience, becomes paramount.

This report analyzes how Copilot Studio agents behave when deployed within Microsoft Teams. The central inquiry is how a user’s Microsoft 365 Copilot licensing status affects an agent’s ability to access and utilize enterprise work data. A key objective is to clarify whether a licensed user receives a more complete response than a non-licensed user and, crucially, whether the agent provides any notification when a response is partial due to data access limitations. This examination aims to equip IT administrators and decision-makers with the insights needed for strategic planning, deployment, and governance of AI solutions within their enterprise environments.

Understanding Copilot Studio Agents and Data Grounding

Microsoft Copilot Studio is a robust, low-code graphical tool engineered for the creation of sophisticated conversational AI agents and their underlying automated processes, known as agent flows.7 These agents are highly adaptable, capable of interacting with users across numerous digital channels, with Microsoft Teams being a prominent deployment environment.7 Beyond simple question-and-answer functionalities, these agents can be configured to execute complex tasks, address common organizational inquiries, and significantly enhance productivity by integrating with diverse data sources. This integration is facilitated through a range of prebuilt connectors or custom plugins, allowing for tailored access to specific datasets.7 A notable capability of Copilot Studio agents is their ability to extend the functionalities of Microsoft 365 Copilot, enabling the delivery of customized responses and actions that are deeply rooted in specific enterprise data and scenarios.7

How Agents Access Data: The Principle of User-Based Permissions and the Role of Microsoft Graph

A fundamental principle governing how Copilot agents, including those developed within Copilot Studio and deployed through Microsoft 365 Copilot, access information is strict adherence to the end-user’s existing permissions: the agent operates within the security context of the individual user who is interacting with it.4 Consequently, the agent retrieves and presents only data that the user initiating the query is explicitly authorized to access.1 This is a deliberate, security-by-design architectural choice that builds on existing Microsoft 365 security models and significantly mitigates the risk of unintended data exfiltration, a paramount concern for enterprises adopting AI solutions. For IT administrators, this means relying on established Microsoft 365 permission structures for data security when deploying Copilot Studio agents, rather than implementing entirely new, AI-specific permission layers for content accessed via the Microsoft Graph, which in turn supports trust in the platform’s handling of sensitive organizational data.

Microsoft 365 Copilot achieves this secure data grounding by leveraging the Microsoft Graph, which acts as the gateway to a user’s personalized work data. This encompasses a broad spectrum of information, including emails, chat histories, and documents stored within the Microsoft 365 ecosystem.1 This grounding mechanism ensures that organizational data boundaries, security protocols, compliance requirements, and privacy standards are meticulously preserved throughout the interaction.1 The agent respects the end user’s information and sensitivity privileges, meaning if the user lacks access to a particular knowledge source, the agent will not include content from it when generating a response.4
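The permission-trimming behavior described above can be illustrated with a minimal sketch. This is not how Copilot Studio or the Microsoft Graph is implemented; the `KnowledgeChunk` type, the `allowed_users` field, and the `ground_for_user` function are hypothetical names used only to show the idea that content the user cannot read is dropped before a response is generated, with no marker left behind.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeChunk:
    """Hypothetical unit of grounding content (not a real Copilot Studio type)."""
    source: str                     # illustrative knowledge-source name
    text: str
    allowed_users: frozenset[str]   # stand-in for the real Microsoft 365 permission check


def ground_for_user(user: str, chunks: list[KnowledgeChunk]) -> list[str]:
    """Return only the text the user may read; everything else is omitted silently."""
    return [c.text for c in chunks if user in c.allowed_users]


chunks = [
    KnowledgeChunk("hr-site", "Vacation policy: 25 days.", frozenset({"alice", "bob"})),
    KnowledgeChunk("finance-site", "Q3 budget draft.", frozenset({"alice"})),
]

print(ground_for_user("alice", chunks))  # both chunks
print(ground_for_user("bob", chunks))    # only the HR chunk, with no hint that more exists
```

In this toy model, the result for `bob` carries no trace of the finance content, which mirrors the report's point that the omission itself is invisible to the user.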

Distinction between Public/Web Data and Enterprise Work Data

Copilot Studio agents can be configured to draw knowledge from publicly available websites, serving as a broad knowledge base.10 When web search is enabled, the agent can fetch information from services like Bing, thereby enhancing the quality and breadth of responses grounded in public web content.11 This allows agents to provide general information or answers based on external, non-proprietary sources.

In contrast, enterprise work data, which includes sensitive and proprietary information residing in SharePoint, OneDrive, and Exchange, is accessed exclusively through the Microsoft Graph. Access to this internal data is strictly governed by the individual user’s explicit permissions, creating a clear delineation between publicly available information and internal organizational knowledge.1 This distinction is fundamental to understanding the varying levels of response completeness based on licensing. The agent’s ability to access and synthesize information from these disparate sources is contingent upon the user’s permissions and, as will be discussed, their specific Microsoft 365 Copilot licensing.

Impact of Microsoft 365 Copilot Licensing on Agent Responses

The licensing structure for Microsoft Copilot profoundly influences the depth and completeness of responses provided by Copilot Studio agents, particularly when those agents are designed to interact with an organization’s internal data.

Licensed User Experience: Comprehensive Access to Work Data

Users who possess a Microsoft 365 Copilot license gain access to a fully integrated AI-powered productivity tool. This tool seamlessly combines large language models with the user’s existing data within the Microsoft Graph and across various Microsoft 365 applications, including Word, Excel, PowerPoint, Outlook, and Teams.1 This deep integration is the cornerstone for delivering highly personalized and comprehensive responses, directly grounded in the user’s work emails, chat histories, and documents.1 The system is designed to provide real-time intelligent assistance, enhancing creativity, productivity, and skills.9

Furthermore, the Microsoft 365 Copilot license encompasses the usage rights for agents developed in Copilot Studio when deployed within Microsoft 365 products such as Microsoft Teams, SharePoint, and Microsoft 365 Copilot Chat. Crucially, interactions involving classic answers, generative answers, or tenant Microsoft Graph grounding for these licensed users are designated as “zero-rated usage”.6 This means that these specific types of interactions do not incur additional charges against Copilot Studio message meters or message packs. This comprehensive inclusion allows licensed users to fully harness the potential of these agents for retrieving information from their authorized internal data sources without incurring unexpected consumption costs. The Microsoft 365 Copilot license therefore functions not just as a feature unlocker but also as a significant cost-efficiency mechanism, particularly for high-frequency interactions with internal enterprise data. Organizations with a substantial user base expected to frequently interact with internal data via Copilot Studio agents should conduct a thorough Total Cost of Ownership (TCO) analysis, as the perceived higher per-user cost of a Microsoft 365 Copilot license might be strategically offset by avoiding unpredictable and potentially substantial pay-as-you-go charges.

Non-Licensed User Experience: Limitations in Accessing Work Data

Users who do not possess the Microsoft 365 Copilot add-on license will not benefit from the same deep, integrated access to their personalized work data via the Microsoft Graph. While these users may still be able to interact with Copilot Studio agents (particularly if the agent’s knowledge base relies on public information or pre-defined, non-Graph-dependent instructions), their capacity to receive responses comprehensively grounded in their specific enterprise work data is significantly restricted.3 This establishes a tiered system for data access within the Copilot ecosystem, where the richness and completeness of an agent’s response are directly linked to the user’s individual licensing status and their underlying data access rights within the organization.

A critical distinction arises for users who have an eligible Microsoft 365 subscription but lack the full Copilot add-on, often categorized as “Microsoft 365 Copilot Chat” users. If such a user interacts with an agent that accesses shared tenant data (e.g., content from SharePoint or through Copilot connectors), these interactions will trigger metered consumption charges, which are tracked via Copilot Studio meters.3 This transforms a functional limitation (less complete answers) into a direct financial consequence. The ability to access some internal data comes at a per-message cost. This means organizations must meticulously evaluate the financial implications of deploying agents to a mixed-license user base. If non-licensed users frequently query internal data via these agents, the cumulative pay-as-you-go (PAYG) charges could become substantial and unpredictable, making the “partial answer” scenario potentially a “costly answer” scenario.

Agents that exclusively draw information from instructions or public websites, however, do not incur these additional costs for any user.3 For individuals who have neither a Copilot license nor a foundational Microsoft 365 subscription, access to Copilot features and extensibility options, including agents leveraging Microsoft 365 data, may not be guaranteed or might be entirely unavailable.3 A potential point of friction arises because an agent might appear discoverable or “addable” within the Teams interface, creating an expectation of full functionality, even if the underlying licensing restricts its actual utility for that user.8 This discrepancy between apparent availability and actual capability can lead to significant user frustration and an increase in support requests.

The following table summarizes the comparative data access and cost implications across different license types:

Comparative Data Access and Cost by License Type

| License Type | Access to Personalized Work Data (Microsoft Graph) | Access to Shared Tenant Data (SharePoint, Connectors) | Access to Public/Instruction-based Data | Additional Usage Charges for Agent Interactions | Response Completeness (Relative) |
| --- | --- | --- | --- | --- | --- |
| Microsoft 365 Copilot (Add-on) | Comprehensive | Comprehensive (Zero-rated) | Yes | No | High (rich, contextually grounded) |
| Microsoft 365 Copilot Chat (Included w/ eligible M365) | Limited/No | Yes (Metered charges apply via Copilot Studio meters) | Yes | Yes (for shared tenant data interactions) | Moderate (limited by work data access) |
| No Copilot License/No M365 Subscription | No | Not guaranteed/No | Yes (if agent accessible) | N/A (likely no access) | Low (limited to public/instructional data) |
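
The charging rules summarized in the table can be expressed as a small decision function. This is a sketch of the rules as stated in this report, not an official billing algorithm; the `License` and `Grounding` enums and the `is_metered` function are hypothetical names, and the sketch assumes the interaction takes place inside a Microsoft 365 service such as Teams.

```python
from enum import Enum


class License(Enum):
    M365_COPILOT = "Microsoft 365 Copilot add-on"
    COPILOT_CHAT = "Microsoft 365 Copilot Chat (eligible M365 subscription, no add-on)"


class Grounding(Enum):
    INSTRUCTIONS_OR_WEB = "instructions or public websites"
    SHARED_TENANT_DATA = "SharePoint content or Copilot connectors"


def is_metered(license: License, grounding: Grounding) -> bool:
    """Return True if the interaction is expected to draw on Copilot Studio meters.

    Assumption: the interaction happens within a Microsoft 365 product (e.g. Teams).
    """
    if license is License.M365_COPILOT:
        return False  # zero-rated for licensed users
    # Without the add-on, only shared tenant data triggers metered charges.
    return grounding is Grounding.SHARED_TENANT_DATA


for lic in License:
    for src in Grounding:
        print(f"{lic.name:13} + {src.name:20} -> metered: {is_metered(lic, src)}")
```

Only one of the four combinations is metered: a user without the add-on querying an agent grounded in shared tenant data.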

Agent Behavior Regarding Partial Answers and Notifications

A critical aspect of user experience with AI agents is how they communicate limitations or incompleteness in their responses. The analysis reveals specific behaviors of Copilot Studio agents in this regard.

Absence of Explicit Partial Answer Notifications

The available information consistently indicates that Copilot Studio agents are not designed to provide explicit notifications to users when a response is partial or incomplete due to the user’s lack of permissions to access underlying knowledge sources.4 Instead, the agent’s operational model dictates that it simply omits any content that the querying user is not authorized to access. This means the user receives a response that is, by design, incomplete from the perspective of the agent’s full knowledge base, but without any direct indication of this omission.

This design choice is a deliberate trade-off, prioritizing stringent data security and privacy protocols. It ensures that the agent never inadvertently reveals the existence of restricted information or the specific reason for its omission to an unauthorized user, thereby preventing potential information leakage or inference attacks. However, this creates a significant information asymmetry: end-users are left unaware of why an answer might be incomplete or why the agent could not fully address their query. They lack the context to understand if the limitation stems from a permission issue, a limitation of the agent’s knowledge, or a technical fault. This places a substantial burden on IT administrators and agent owners to proactively manage user expectations. Without transparent communication regarding the scope and limitations of agents for different user profiles, users may perceive the agent as unreliable, inconsistent, or broken, potentially leading to decreased adoption rates and an increase in support requests.

Generic Error Messages and Implicit Limitations

When a Copilot Studio agent encounters a scenario where it cannot fulfill a query comprehensively, whether due to inaccessible data, a lack of relevant information in its knowledge sources, or other technical issues, it typically defaults to generic, non-specific responses. A common example cited is “I’m sorry. I’m not sure how to help with that. Can you try rephrasing?”.5 Crucially, this message does not explicitly attribute the inability to provide a full answer to licensing limitations or specific data access permissions.

Other forms of service denial can manifest if the agent’s underlying capacity limits are reached. For instance, an agent might display a message stating, “This agent is currently unavailable. It has reached its usage limit. Please try again later”.12 While this is a clear notification of service unavailability, it pertains to a broader capacity issue rather than the specific scenario of partial data due to user permissions. When an agent responds with a vague message and the underlying cause is a data access limitation, the actual reason for the failure remains opaque: from the end-user’s perspective, the agent’s data retrieval is a “black box.” This lack of transparency hinders effective interaction and self-service, as users cannot intelligently rephrase their questions, tell whether they need a different license, or determine whether they should seek information elsewhere.
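
The opacity described in this section can be made concrete with a toy model. The two message strings are the ones quoted in this report; the `FailureCause` enum and the `user_facing_message` function are hypothetical and do not describe the agent's actual internals. The sketch only shows that several distinct causes collapse into the same user-visible text.

```python
from enum import Enum, auto

# Messages quoted in this report.
GENERIC_FALLBACK = "I'm sorry. I'm not sure how to help with that. Can you try rephrasing?"
CAPACITY_MESSAGE = (
    "This agent is currently unavailable. It has reached its usage limit. "
    "Please try again later"
)


class FailureCause(Enum):
    """Hypothetical internal reasons a query cannot be answered."""
    NO_PERMISSION = auto()
    NO_RELEVANT_KNOWLEDGE = auto()
    TECHNICAL_ISSUE = auto()
    CAPACITY_EXHAUSTED = auto()


def user_facing_message(cause: FailureCause) -> str:
    """Map an internal cause to what the end-user sees in this simplified model."""
    if cause is FailureCause.CAPACITY_EXHAUSTED:
        return CAPACITY_MESSAGE
    # Permission, knowledge, and technical failures are indistinguishable to the user.
    return GENERIC_FALLBACK


distinct = {user_facing_message(c) for c in FailureCause}
print(f"{len(FailureCause)} internal causes, {len(distinct)} distinct user-facing messages")
```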

Information for Makers/Admins vs. End-User Experience

Copilot Studio provides robust analytics capabilities designed for agent makers and administrators to monitor and assess agent performance.13 These analytics offer valuable insights into the quality of generative answers, capable of identifying responses that are “incomplete, irrelevant, or not fully grounded”.13 This diagnostic information is crucial for the continuous improvement of the agent.

However, a key distinction is that these analytics results are strictly confined to the administrative and development interfaces; “Users of agents don’t see analytics results; they’re available to agent makers and admins only”.13 This means that while administrators can discern why an agent might be providing incomplete answers (e.g., due to data access issues), this critical diagnostic information is not conveyed to the end-user. This reinforces the need for clear guidance on what types of questions agents can answer for different user profiles and what data sources they are grounded in.

Licensing and Cost Implications for Agent Usage

Understanding the licensing models for Copilot Studio and Microsoft 365 Copilot is essential for managing the financial implications of deploying AI agents, especially in environments with diverse user licensing.

Overview of Copilot Studio Licensing Models

Microsoft Copilot Studio offers a flexible licensing framework comprising three primary models: Pay-as-you-go, Message Packs, and inclusion within the Microsoft 365 Copilot license.6 The Pay-as-you-go model provides consumption-based billing at $0.01 per message, requiring no upfront commitment and allowing organizations to scale usage dynamically based on actual consumption.6 Alternatively, Message Packs offer prepaid capacity, with a standard pack providing 25,000 messages per month for $200.6 For additional capacity beyond message packs, organizations are advised to sign up for pay-as-you-go to ensure business continuity.6

Significantly, the Microsoft 365 Copilot license, an add-on priced at $30 per user per month, includes the usage rights for Copilot Studio agents when utilized within core Microsoft 365 products such as Teams, SharePoint, and Copilot Chat. Crucially, interactions involving classic answers, generative answers, or tenant Microsoft Graph grounding for these licensed users are “zero-rated,” meaning they do not consume from Copilot Studio message meters or incur additional charges.6 This provides a distinct cost advantage for organizations with a high number of Microsoft 365 Copilot licensed users.
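
The cost comparison implied by these prices can be worked through with simple arithmetic. The sketch below uses only the figures stated in this report ($0.01 per pay-as-you-go message and $30 per user per month for the add-on). The `messages_per_interaction` parameter is an assumption: this report does not state how many billed messages a single agent interaction consumes, so it is left adjustable. Amounts are kept in integer cents to avoid floating-point rounding.

```python
PAYG_CENTS_PER_MESSAGE = 1       # $0.01 per message (figure from this report)
LICENSE_CENTS_PER_USER = 3000    # $30 per user per month (figure from this report)


def payg_cost_cents(interactions: int, messages_per_interaction: int = 1) -> int:
    """Monthly pay-as-you-go cost in cents for one non-licensed user."""
    return interactions * messages_per_interaction * PAYG_CENTS_PER_MESSAGE


def break_even_interactions(messages_per_interaction: int = 1) -> int:
    """Smallest monthly interaction count at which PAYG cost reaches the license price."""
    cents_per_interaction = messages_per_interaction * PAYG_CENTS_PER_MESSAGE
    # Ceiling division using integer arithmetic.
    return -(-LICENSE_CENTS_PER_USER // cents_per_interaction)


print(break_even_interactions())       # 3000 interactions at one message each
print(break_even_interactions(10))     # 300 if each interaction were billed as 10 messages
print(payg_cost_cents(500) / 100)      # dollars for 500 single-message interactions
```

At one billed message per interaction, a non-licensed user would need 3,000 metered interactions in a month before pay-as-you-go charges match the $30 add-on; the threshold falls proportionally if an interaction is billed as multiple messages. A full TCO analysis would also need to account for the other capabilities the add-on includes, which this arithmetic ignores.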

It is important to differentiate between a Copilot Studio user license (which is free of charge) and the Microsoft 365 Copilot license. The free Copilot Studio user license is primarily for individuals who need access to create and manage agents.14 This does not imply free consumption of agent responses for all users, particularly when those agents interact with enterprise data. This distinction is vital for IT administrators to communicate clearly within their organizations to prevent false expectations about “free” AI agent usage and potentially unexpected costs or functional limitations for end-users.

Discussion of Metered Charges for Non-Licensed Users Accessing Shared Tenant Data

While a dedicated Copilot Studio user license is primarily for authoring and managing agents 14 and not strictly required for interacting with a published agent, the user’s Microsoft 365 Copilot license status profoundly impacts the cost structure when the agent accesses shared tenant data.3 For users who possess an eligible Microsoft 365 subscription but do not have the Microsoft 365 Copilot add-on (i.e., those utilizing “Microsoft 365 Copilot Chat”), interactions with agents that retrieve information grounded in shared tenant data (such as SharePoint content or data via Copilot connectors) will trigger metered consumption charges. These charges are tracked and billed based on Copilot Studio meters.3 This is explicitly stated: “If people that the agent is shared with are not licensed with a Microsoft 365 Copilot license, they will start consuming on a PAYG subscription per message they receive from the agent”.8 Conversely, agents that rely exclusively on pre-defined instructions or publicly available website content do not incur these additional costs for any user, regardless of their Copilot license status.3

A significant governance concern arises when users share agents. If users share their agent with SharePoint content attached to it, the system may propose to “break the SharePoint permission on the assets attached and share the SharePoint resources directly with the audience group”.8 When combined with the metered PAYG model for non-licensed users accessing shared tenant data, this creates a potent dual risk. A well-meaning but uninformed user could inadvertently share an agent linked to sensitive internal data with a broad audience, potentially circumventing existing SharePoint permissions and exposing data, while simultaneously triggering unexpected and significant metered charges for those non-licensed users who then interact with the agent. This highlights a severe governance vulnerability, despite Microsoft’s statement that “security fears are gone” due to access inheritance.8 The acknowledgment of a “roadmap to address this security gap” 16 indicates that this remains an active area of concern for Microsoft.

Capacity Enforcement and Service Denial

Organizations must understand that Copilot Studio’s purchased capacity, particularly through message packs, is enforced on a monthly basis, and any unused messages do not roll over to the subsequent month.6 Should an organization’s actual usage exceed its purchased capacity, technical enforcement mechanisms will be triggered, which “might result in service denial”.6 This can manifest to the end-user as an agent becoming unavailable, accompanied by a message such as “This agent is currently unavailable. It has reached its usage limit. Please try again later”.12 This underscores the critical importance of proactive capacity management to ensure service continuity and avoid disruptions to user access.
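
The monthly enforcement described above can be sketched as a simple calculation. The pack size comes from this report; the `simulate_month` function and its result keys are hypothetical, and the sketch assumes (following the report's recommendation) that enabling pay-as-you-go absorbs any overage and so avoids the risk of service denial.

```python
PACK_MESSAGES = 25_000  # messages per pack per month (figure from this report)


def simulate_month(packs: int, usage: int, payg_enabled: bool) -> dict:
    """Summarize one month of message-pack consumption in this simplified model."""
    capacity = packs * PACK_MESSAGES
    overage = max(0, usage - capacity)
    return {
        # Unused messages do not roll over to the next month.
        "unused_forfeited": max(0, capacity - usage),
        "overage": overage,
        # Exceeding capacity "might result in service denial" unless PAYG covers it
        # (assumption: PAYG fully absorbs the overage).
        "service_denial_risk": overage > 0 and not payg_enabled,
    }


print(simulate_month(packs=1, usage=20_000, payg_enabled=False))
print(simulate_month(packs=1, usage=30_000, payg_enabled=False))
print(simulate_month(packs=1, usage=30_000, payg_enabled=True))
```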

The following table provides a detailed breakdown of Copilot Studio licensing and its associated usage cost implications:

Copilot Studio Licensing and Usage Cost Implications

| License Type | Primary Purpose | Cost Model | Agent Usage of Personalized Work Data (Microsoft Graph) | Agent Usage of Shared Tenant Data (SharePoint, Connectors) | Agent Usage of Public/Instructional Data | Capacity Enforcement | Target User Type |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Microsoft 365 Copilot (Add-on) | Full M365 Integration & AI | $30/user/month (add-on) | Zero-rated | Zero-rated (for licensed user’s interactions) | Zero-rated | N/A (unlimited for licensed features) | Frequent users of M365 apps |
| Microsoft 365 Copilot Chat (Included w/ eligible M365) | Web-based Copilot Chat & limited work data access | Included with M365 subscription | N/A | Metered charges apply (via Copilot Studio meters) | No extra charges | N/A (unlimited for web, metered for work) | Occasional Copilot users |
| Copilot Studio Message Packs | Pre-purchased message capacity for agents | $200/tenant/month (25,000 messages) | Consumes message packs | Consumes message packs | Consumes message packs | Monthly enforcement (unused don’t carry over) | Broad internal/external agent users |
| Copilot Studio Pay-as-you-go | On-demand message capacity for agents | $0.01/message | Consumes PAYG | Consumes PAYG | Consumes PAYG | Monthly enforcement (based on actual usage) | Flexible/scalable agent users |

Key Considerations for IT Administrators and Deployment

The complexities of licensing, data access, and agent behavior necessitate strategic planning and robust management by IT administrators to ensure successful deployment and optimal user experience.

Managing User Expectations Regarding Agent Capabilities Based on Licensing

Given the tiered data access model and the agent’s silent omission of inaccessible content, it is paramount for IT administrators to proactively and clearly communicate the precise capabilities and inherent limitations of Copilot Studio agents to different user groups, explicitly linking these to their licensing status. This communication strategy must encompass educating users on the types of questions agents can answer comprehensively (e.g., those based on public information or general, universally accessible company policies) versus those queries that necessitate a Microsoft 365 Copilot license for personalized, internal data grounding. Setting accurate expectations can significantly mitigate user frustration and enhance perceived agent utility.17

Strategies for Data Governance and Access Control for Copilot Studio Agents

It is crucial to continually reinforce and leverage the fundamental principle of user-based permissions for data access within the Copilot ecosystem.1 This means that existing security policies and permission structures within SharePoint, OneDrive, and the broader Microsoft Graph environment remain the authoritative control points. Organizations must implement and rigorously enforce Data Loss Prevention (DLP) policies within the Power Platform. These policies are vital for granularly controlling how Copilot Studio agents interact with external APIs and sensitive internal data.16 Administrators should also remain vigilant about the acknowledged “security gap” related to API plugins and monitor Microsoft’s roadmap for addressing it.16

Careful management of agent sharing permissions is non-negotiable. Administrators must be acutely aware of the potential for agents to prompt users to “break permissions” on SharePoint content when sharing, which could inadvertently broaden data access beyond intended boundaries.4 Comprehensive training for agent creators on the implications of sharing agents linked to internal data sources is essential. Administrators possess granular control over agent availability and access within the Microsoft 365 admin center, allowing for precise deployment to “All users,” “No users,” or “Specific users or groups”.18 This administrative control point is critical for ensuring that agents are only discoverable and usable by their intended audience, aligning with organizational security policies.

Best Practices for Deploying Agents in Mixed-License Environments

To optimize agent deployment and user experience in environments with mixed licensing, several best practices are recommended:

- Purpose-Driven Agent Design: Design agents with a clear understanding of their intended audience and the data sources they will access. For broad deployment across a mixed-license user base, prioritize agents primarily grounded in public information, general company FAQs, or non-sensitive, universally accessible internal data. For agents requiring personalized work data access, specifically target their deployment to Microsoft 365 Copilot licensed users.

- Proactive Cost Monitoring: Establish robust mechanisms for actively monitoring Copilot Studio message consumption, particularly if non-licensed users are interacting with agents that access shared tenant data. This proactive monitoring is crucial for avoiding unexpected and potentially significant pay-as-you-go charges.6

- Comprehensive User Training and Education: Develop and deliver comprehensive training programs that clearly outline the capabilities and limitations of AI agents, the direct impact of licensing on data access, and what users can realistically expect from agent interactions based on their specific access levels. This proactive education is key to mitigating user frustration stemming from partial answers.

- Structured Admin Approval Workflows: Implement mandatory admin approval processes for the submission and deployment of all Copilot Studio agents, especially those configured to access internal organizational data. This ensures that agents are compliant with company policies, properly configured for data access, and thoroughly tested before broad release.17

- Strategic Environment Management: Consider establishing separate Power Platform environments within the tenant for different categories of agents (e.g., internal-facing vs. external-facing, or agents with varying levels of data sensitivity). This strategy enhances governance, simplifies access control, and helps prevent unintended data interactions across different use cases.8 It is also important to ensure that the “publish Copilots with AI features” setting is enabled for makers building agents with generative AI capabilities.16

Conclusion

This report confirms that Microsoft 365 Copilot licensing directly and significantly impacts the completeness and richness of responses provided by Copilot Studio agents, primarily by governing a user’s access to personalized work data via the Microsoft Graph. Licensed users benefit from comprehensive, contextually grounded answers, while non-licensed users face inherent limitations in accessing this internal data.

A critical finding is the absence of explicit notifications from Copilot Studio agents when a response is partial or incomplete due to licensing constraints or insufficient data access permissions. The agent employs a “silent omission” mechanism. While this approach benefits security by preventing unauthorized disclosure of data existence, it creates an information asymmetry for the end-user, who receives an incomplete answer without explanation.

Furthermore, the analysis reveals significant cost implications: interactions by non-licensed users with agents that access shared tenant data will incur metered consumption charges, contrasting sharply with the “zero-rated usage” for Microsoft 365 Copilot licensed users. This highlights that licensing directly affects not only functionality but also operational expenditure.

To optimize agent deployment and user experience, the following recommendations are provided:

- Proactive User Communication: Organizations must implement comprehensive communication strategies to clearly articulate the capabilities and limitations of AI agents based on user licensing. This includes setting realistic expectations for response completeness and data access to prevent frustration and build trust in the AI solutions.

- Robust Data Governance: Strengthen existing data governance frameworks, including Data Loss Prevention (DLP) policies within the Power Platform, and manage agent sharing controls carefully. In environments with mixed license types, this mitigates security risks and helps contain unexpected metered charges.

- Strategic Licensing Evaluation: IT leaders should conduct a thorough total cost of ownership analysis to evaluate the long-term financial benefits of broader Microsoft 365 Copilot adoption for users who frequently require access to internal organizational data through AI agents. This analysis should weigh the upfront license costs against the unpredictable nature of pay-as-you-go charges that would otherwise accumulate.

- Continuous Monitoring and Refinement: Leverage Copilot Studio’s built-in analytics to continuously monitor agent performance, identify instances of incomplete or ungrounded responses, and use these observations to refine agent configurations, optimize knowledge sources, and further enhance user education.
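
The trade-off in the Strategic Licensing Evaluation recommendation can be illustrated with a simple break-even calculation. The sketch below is illustrative only: the class, the function names, and every figure in it are hypothetical placeholders, not Microsoft prices or billing rules. It assumes a flat monthly license cost per user and a fixed metered charge per billable unit, and it asks how many tenant-data interactions per month a non-licensed user would need before metered charges match the license cost.

```python
from dataclasses import dataclass
from decimal import Decimal
import math


@dataclass(frozen=True)
class CostAssumptions:
    """Hypothetical inputs; replace with figures from your own agreement."""
    license_cost_per_user_month: Decimal  # flat monthly license price (placeholder)
    metered_cost_per_unit: Decimal        # pay-as-you-go price per billable unit (placeholder)
    units_per_interaction: int            # billable units one tenant-data interaction consumes (placeholder)


def monthly_metered_cost(interactions_per_month: int, a: CostAssumptions) -> Decimal:
    """Metered cost for one non-licensed user in one month."""
    return a.metered_cost_per_unit * a.units_per_interaction * interactions_per_month


def break_even_interactions(a: CostAssumptions) -> int:
    """Smallest monthly interaction count at which metered cost reaches the license cost."""
    cost_per_interaction = a.metered_cost_per_unit * a.units_per_interaction
    return math.ceil(a.license_cost_per_user_month / cost_per_interaction)


def cheaper_option(interactions_per_month: int, a: CostAssumptions) -> str:
    """Return 'license' once metered cost meets or exceeds the license cost, else 'metered'."""
    metered = monthly_metered_cost(interactions_per_month, a)
    return "license" if metered >= a.license_cost_per_user_month else "metered"


if __name__ == "__main__":
    # Placeholder numbers chosen only to make the arithmetic easy to follow.
    assumptions = CostAssumptions(
        license_cost_per_user_month=Decimal("30"),
        metered_cost_per_unit=Decimal("0.01"),
        units_per_interaction=10,
    )
    print("Break-even interactions per month:", break_even_interactions(assumptions))
    for n in (100, 300, 500):
        print(n, monthly_metered_cost(n, assumptions), cheaper_option(n, assumptions))
```

Running the same calculation per user segment, with actual usage taken from agent analytics and prices from the organization's own agreement, shows which groups are cheaper to license outright and which can remain on metered consumption. A real analysis would also need to account for variable consumption per interaction, which this sketch treats as constant.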

Works cited

1. What is Microsoft 365 Copilot? | Microsoft Learn, accessed on July 3, 2025, https://learn.microsoft.com/en-us/copilot/microsoft-365/microsoft-365-copilot-overview

2. Retrieve grounding data using the Microsoft 365 Copilot Retrieval API, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-365-copilot/extensibility/api-reference/copilotroot-retrieval

3. Licensing and Cost Considerations for Copilot Extensibility Options …, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-365-copilot/extensibility/cost-considerations

4. Publish and Manage Copilot Studio Agent Builder Agents | Microsoft Learn, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-365-copilot/extensibility/copilot-studio-agent-builder-publish

5. Agent accessed via Teams not able to access Sharepoint : r/copilotstudio – Reddit, accessed on July 3, 2025, https://www.reddit.com/r/copilotstudio/comments/1l1gm82/agent_accessed_via_teams_not_able_to_access/

6. Copilot Studio licensing – Learn Microsoft, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/billing-licensing

7. Overview – Microsoft Copilot Studio | Microsoft Learn, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/fundamentals-what-is-copilot-studio

8. Copilot agents on enterprise level : r/microsoft_365_copilot – Reddit, accessed on July 3, 2025, https://www.reddit.com/r/microsoft_365_copilot/comments/1l7du4v/copilot_agents_on_enterprise_level/

9. Microsoft 365 Copilot – Service Descriptions, accessed on July 3, 2025, https://learn.microsoft.com/en-us/office365/servicedescriptions/office-365-platform-service-description/microsoft-365-copilot

10. Quickstart: Create and deploy an agent – Microsoft Copilot Studio, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/fundamentals-get-started

11. Data, privacy, and security for web search in Microsoft 365 Copilot and Microsoft 365 Copilot Chat | Microsoft Learn, accessed on July 3, 2025, https://learn.microsoft.com/en-us/copilot/microsoft-365/manage-public-web-access

12. Understand error codes – Microsoft Copilot Studio, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/error-codes

13. FAQ for analytics – Microsoft Copilot Studio, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/faqs-analytics

14. Assign licenses and manage access to Copilot Studio – Learn Microsoft, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/requirements-licensing

15. Access to agents in M365 Copilot Chat for all business users? : r/microsoft_365_copilot, accessed on July 3, 2025, https://www.reddit.com/r/microsoft_365_copilot/comments/1i3gu63/access_to_agents_in_m365_copilot_chat_for_all/

16. A Microsoft 365 Administrator’s Beginner’s Guide to Copilot Studio, accessed on July 3, 2025, https://practical365.com/copilot-studio-beginner-guide/

17. Connect and configure an agent for Teams and Microsoft 365 Copilot, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-copilot-studio/publication-add-bot-to-microsoft-teams

18. Manage agents for Microsoft 365 Copilot in the Microsoft 365 admin center, accessed on July 3, 2025, https://learn.microsoft.com/en-us/microsoft-365/admin/manage/manage-copilot-agents-integrated-apps?view=o365-worldwide